API Requests

This page explains how to send requests to a registered model and receive its responses.

API Environment

Models deployed on Elice AI Cloud run on BentoML and expose the following API endpoints.

BentoML API Endpoints

- Custom API: Runs inference through the API defined in your BentoML service and returns the result.

- /livez: Health check endpoint for Kubernetes. Responds with status code 200 OK when the service is healthy.

- /readyz: A 200 OK response from /readyz indicates that the service is ready to accept traffic. From that point on, Kubernetes uses the /livez endpoint for periodic health checks.

- /metrics: Prometheus metrics endpoint. Prometheus can collect the service's metrics from the values that /metrics returns.
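The built-in endpoints above can be checked from a terminal with curl. The following is a minimal sketch, not an official tool: API_URL, API_KEY, probe_endpoint, and probe_all are hypothetical names, and it assumes the health and metrics endpoints also accept the Bearer token that ML API calls require.

```shell
# Minimal sketch: report the HTTP status code of each built-in BentoML endpoint.
# Assumptions: API_URL holds the base URL shown in the console (the default is
# the example URL from this page) and API_KEY is also sent on these paths.
API_URL="${API_URL:-https://api-cloud-function.elice.io/3ed3b4e7-46cb-459f-abd9-e30680e8b11f}"

probe_endpoint() {
  # $1: endpoint path such as /livez; prints only the status code (000 = no response)
  curl -s -o /dev/null -w '%{http_code}' \
    -H "Authorization: Bearer ${API_KEY:-}" \
    "${API_URL%/}$1"
}

probe_all() {
  # Prints one line per endpoint: "<path> <status code>"
  local path
  for path in /livez /readyz /metrics; do
    echo "$path $(probe_endpoint "$path")"
  done
}
```

After exporting API_KEY with your issued key, running probe_all should print 200 on each line once the service is ready. The body is discarded here; to read the metrics themselves, call curl on /metrics without -o.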

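API Request Authentication

Every ML API call requires API Key authentication. Requests that do not include an API Key are treated as unauthorized and are not processed.

For how to issue and manage API Keys, see the API Key Management document.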
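One way to avoid pasting the key into every command is to keep it in an environment variable and build the header from it. This is a minimal sketch: ELICE_API_KEY and auth_header are hypothetical names, and the Bearer format follows the Authorization header used in the curl example on this page.

```shell
# Minimal sketch: build the Authorization header from an environment variable.
# ELICE_API_KEY is a hypothetical variable name; set it to your issued API Key.
auth_header() {
  if [ -z "${ELICE_API_KEY:-}" ]; then
    # Fail loudly instead of sending a request without a key
    echo "ELICE_API_KEY is not set" >&2
    return 1
  fi
  printf 'Authorization: Bearer %s' "$ELICE_API_KEY"
}
```

Use it as `header="$(auth_header)" || exit 1` followed by `curl -H "$header" ...`. Checking the exit status matters: a bare `$(auth_header)` inside the curl command would silently produce an empty header, and the request would be rejected as unauthorized.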

Checking the API URL

You can find the base URL for API requests in the following location.

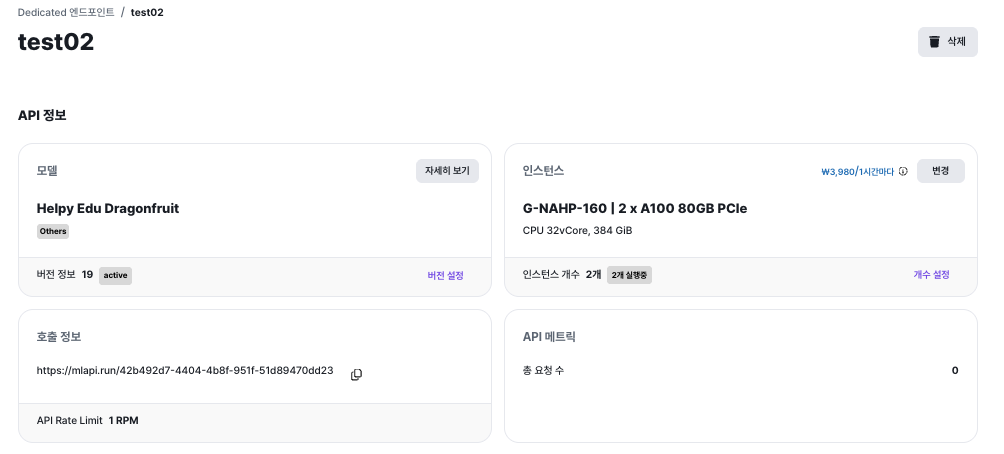

- (For Dedicated) On the Dedicated Endpoints page, select the API you want to view to open its detail page.

- Find the call information in the API Information section of the detail page.

BentoML API Request Examples

To send a request, append a BentoML endpoint path to the API URL. A few examples follow.
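Appending a path is plain string concatenation, but a stray slash on either side can produce URLs such as .../readyz with a doubled or missing slash. A minimal helper, with join_url as a hypothetical name:

```shell
# Minimal sketch: join the API URL and an endpoint path with exactly one slash.
join_url() {
  local base="${1%/}"   # drop one trailing slash from the base URL
  local path="${2#/}"   # drop one leading slash from the endpoint path
  printf '%s/%s' "$base" "$path"
}
```

For example, `join_url "$API_URL" /generate` and `join_url "$API_URL/" generate` both yield the same URL.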

Health check request (/readyz)

- Append /readyz to the API URL and send the request, for example: https://api-cloud-function.elice.io/3ed3b4e7-46cb-459f-abd9-e30680e8b11f/readyz
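Right after deployment the service may not be ready yet, so a client can poll /readyz until it returns 200 OK before sending inference requests. This is a minimal sketch: wait_until_ready and its default retry count and interval are illustrative assumptions, and the Bearer header assumes the API Key is also required on /readyz.

```shell
# Minimal sketch: poll /readyz until it returns 200 OK or attempts run out.
# Args: base API URL, API key, max attempts (default 30), seconds between tries (default 5)
wait_until_ready() {
  local url="$1" key="$2" attempts="${3:-30}" interval="${4:-5}"
  local i=1 code
  while [ "$i" -le "$attempts" ]; do
    code="$(curl -s -o /dev/null -w '%{http_code}' \
      -H "Authorization: Bearer ${key}" "${url%/}/readyz")"
    if [ "$code" = "200" ]; then
      echo "ready after $i attempt(s)"
      return 0
    fi
    echo "attempt $i: /readyz returned $code" >&2
    i=$((i + 1))
    sleep "$interval"
  done
  return 1  # never saw 200 OK
}
```

A typical call is `wait_until_ready "https://api-cloud-function.elice.io/3ed3b4e7-46cb-459f-abd9-e30680e8b11f" "$API_KEY" 30 5 && echo "send requests now"`.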

Inference request (custom endpoint)

- Append the path of your custom API endpoint to the API URL, for example: https://api-cloud-function.elice.io/3ed3b4e7-46cb-459f-abd9-e30680e8b11f/generate

- If you send the request with curl, it looks like the following. Replace {{api_key}} with your issued API Key.

```shell
curl -X 'POST' \
  'https://api-cloud-function.elice.io/3ed3b4e7-46cb-459f-abd9-e30680e8b11f/generate' \
  -H 'accept: image/*' \
  -H 'Content-Type: application/json' \
  -H 'Authorization: Bearer {{api_key}}' \
  -d '{
    "prompt": "a tiny astronaut hatching from an egg on the moon",
    "height": 512,
    "width": 512,
    "num_inference_steps": 4,
    "guidance_scale": 0
  }'
```
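Because this request asks for accept: image/*, the response body is image data rather than text, so it is usually written to a file instead of the terminal. This is a minimal sketch, assuming the endpoint returns the image bytes directly with 200 OK on success. generate_image and the default file name output.png are hypothetical, and the prompt is inserted into the JSON without escaping, so it must not contain double quotes or backslashes.

```shell
# Minimal sketch: call /generate and save the response body to a file.
# Assumes a 200 OK response carries the image bytes; the prompt is NOT
# JSON-escaped, so avoid double quotes and backslashes in it.
generate_image() {
  local url="$1" key="$2" prompt="$3" out="${4:-output.png}"
  local code
  code="$(curl -s -X POST "${url%/}/generate" \
    -H 'accept: image/*' \
    -H 'Content-Type: application/json' \
    -H "Authorization: Bearer ${key}" \
    -d "{\"prompt\": \"${prompt}\", \"height\": 512, \"width\": 512, \"num_inference_steps\": 4, \"guidance_scale\": 0}" \
    -o "$out" -w '%{http_code}')"
  if [ "$code" != "200" ]; then
    echo "request failed with HTTP $code; see $out for any error body" >&2
    return 1
  fi
  return 0
}
```

For example: `generate_image "https://api-cloud-function.elice.io/3ed3b4e7-46cb-459f-abd9-e30680e8b11f" "$API_KEY" "a tiny astronaut hatching from an egg on the moon" astronaut.png`. The -w option prints only the status code to stdout while -o sends the body to the file, which lets the function check for success without inspecting the binary data.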